Image Sensor and Image Processing Basics

2019/08/30


Image processing basics

Image processing captures objects as images on a two-dimensional plane and evaluates them. This makes it well suited to automated inspection, where it is widely used as an alternative to visual inspection. This section introduces CCD (pixel) sensors, which are the foundation of image processing, and then covers image processing basics.

Image sensor

A digital camera has almost the same structure as a conventional (analog) camera. The difference is that a digital camera is equipped with an image sensor, either a CCD (charge-coupled device) or a CMOS (complementary metal-oxide-semiconductor) sensor. The image sensor plays the role of the film in a conventional camera and captures images as digital information. But how does it convert an image into digital signals?

When a picture is taken, light reflected from the target passes through the lens and forms an image on the sensor. When a pixel on the sensor receives light, it generates an electric charge corresponding to the light intensity. This charge is converted into an electric signal, from which the light intensity (gray-level value) received by each pixel is obtained.
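To make the light-to-charge-to-signal chain concrete, here is a minimal Python sketch of a single pixel. The quantum efficiency and the full-well capacity (the most charge a pixel can store before it saturates) are hypothetical values chosen only for illustration, not figures for any real sensor, and the model ignores noise.

```python
# Hypothetical parameters, for illustration only.
QUANTUM_EFFICIENCY = 0.6      # fraction of incoming photons that generate an electron
FULL_WELL_ELECTRONS = 10_000  # maximum charge one pixel can hold


def pixel_signal(photons: int) -> float:
    """Return the pixel's signal as a fraction of full scale (0.0 to 1.0)."""
    # The charge generated is proportional to the light received.
    electrons = photons * QUANTUM_EFFICIENCY
    # A pixel cannot store more than its full-well capacity, so very bright light saturates.
    electrons = min(electrons, FULL_WELL_ELECTRONS)
    # Reading out the stored charge gives a signal proportional to it.
    return electrons / FULL_WELL_ELECTRONS


for photons in (0, 5_000, 20_000):
    print(photons, pixel_signal(photons))
```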

Use of pixel data for image processing

Individual pixel data (for a standard black-and-white camera)

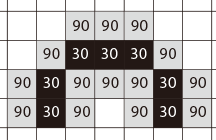

In many vision sensors, each pixel outputs data in 256 levels (8 bits) according to the light intensity it receives. In monochrome (black-and-white) processing, black is assigned “0” and white “255”, so the light intensity received by each pixel is converted into numerical data. This means that every pixel of a CCD has a value between 0 (black) and 255 (white). For example, a gray exactly halfway between black and white is converted to “127”.
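The mapping from light intensity to an 8-bit level can be sketched as follows. This assumes the pixel signal has already been normalized to the range 0.0 (black) to 1.0 (white) and that the fractional part is truncated; if the value were rounded instead, mid-gray would come out as 128. The function name `to_gray_level` is hypothetical.

```python
def to_gray_level(intensity: float) -> int:
    """Map a normalized light intensity (0.0 = black, 1.0 = white) to an 8-bit level."""
    # Clamp to the valid range in case the input is slightly out of bounds.
    intensity = max(0.0, min(1.0, intensity))
    # 8 bits give 2**8 = 256 levels, numbered 0 to 255; int() truncates toward zero.
    return int(intensity * 255)


print(to_gray_level(0.0), to_gray_level(0.5), to_gray_level(1.0))
```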

An image is a collection of 256-level data

Image data captured with a CCD is the collection of data from the pixels that make up the CCD, with each pixel reproduced as 256-level contrast data.

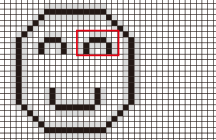

Panels: raw image; the image on the left represented with 2,500 pixels; the eye enlarged and represented as 256-level data.
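In code, such an image is simply a two-dimensional grid of 8-bit values. This sketch assumes a 50 × 50 layout for the 2,500 pixels (the text gives only the total) and fills it with a synthetic left-to-right gradient rather than a real photo. `crop` is a hypothetical helper that extracts a small region, much like enlarging the eye in the figure.

```python
WIDTH, HEIGHT = 50, 50  # hypothetical layout: 50 x 50 = 2,500 pixels

# Each row is a list of 8-bit values; this synthetic gradient runs from 0 (left) to 255 (right).
image = [[(x * 255) // (WIDTH - 1) for x in range(WIDTH)] for _ in range(HEIGHT)]


def crop(img, top, left, height, width):
    """Return a sub-region of the image so a small area can be inspected value by value."""
    return [row[left:left + width] for row in img[top:top + height]]


print(sum(len(row) for row in image))  # total number of pixels
for row in crop(image, 20, 0, 3, 5):
    print(row)
```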

As in the example above, each pixel of an image is represented by a value from 0 to 255. Image processing finds features in an image by applying a variety of calculation methods to this per-pixel numerical data, as shown below.

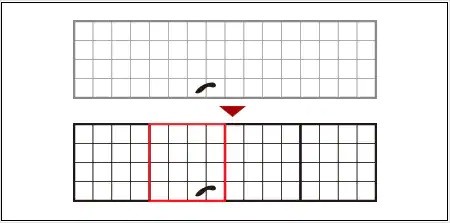

Example: Stain / defect inspection

The inspection area is divided into small areas called segments, and the average intensity (0 to 255) of each segment is compared with that of the surrounding area. Spots where the difference in intensity exceeds a specified value are detected as stains or defects.

The average intensity of each segment (4 × 4 pixels) is compared with that of the surrounding area. In the example above, a stain is detected in the red segment.
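The segment comparison described above can be sketched in Python as follows. It assumes 4 × 4-pixel segments, treats the "surrounding area" as the up to eight adjacent segments, and uses a hypothetical threshold of 30 levels; actual inspection tools may define the surrounding area and the threshold differently.

```python
SEGMENT = 4     # segment size in pixels (4 x 4)
THRESHOLD = 30  # hypothetical: minimum difference in average intensity that counts as a defect


def segment_means(img, size=SEGMENT):
    """Average intensity of each size x size segment.

    Assumes the image height and width are multiples of `size`.
    """
    rows, cols = len(img) // size, len(img[0]) // size
    means = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            block = [img[y][x]
                     for y in range(r * size, (r + 1) * size)
                     for x in range(c * size, (c + 1) * size)]
            means[r][c] = sum(block) / len(block)
    return means


def find_defects(img, size=SEGMENT, threshold=THRESHOLD):
    """Return (row, col) indices of segments that differ from their surroundings by more than threshold."""
    means = segment_means(img, size)
    rows, cols = len(means), len(means[0])
    defects = []
    for r in range(rows):
        for c in range(cols):
            # Surrounding area: the up-to-eight adjacent segments (fewer at the edges).
            neighbors = [means[rr][cc]
                         for rr in range(max(0, r - 1), min(rows, r + 2))
                         for cc in range(max(0, c - 1), min(cols, c + 2))
                         if (rr, cc) != (r, c)]
            if not neighbors:
                continue  # a single-segment image has nothing to compare against
            surround = sum(neighbors) / len(neighbors)
            if abs(means[r][c] - surround) > threshold:
                defects.append((r, c))
    return defects


# Usage: a uniform 24 x 24 gray image (level 200) with a dark 4 x 4 stain (level 80).
img = [[200] * 24 for _ in range(24)]
for y in range(8, 12):
    for x in range(8, 12):
        img[y][x] = 80
print(find_defects(img))  # segment (2, 2) is flagged
```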